Concerns Arise Over Fine-Tuning of Large Language Models

In the rapidly evolving field of artificial intelligence, the practice of fine-tuning large language models (LLMs) for knowledge injection has come under scrutiny. Recent insights from TLDR AI highlight that this approach may not only be ineffective but could also have detrimental effects on the models' capabilities.

Potential Risks of Fine-Tuning

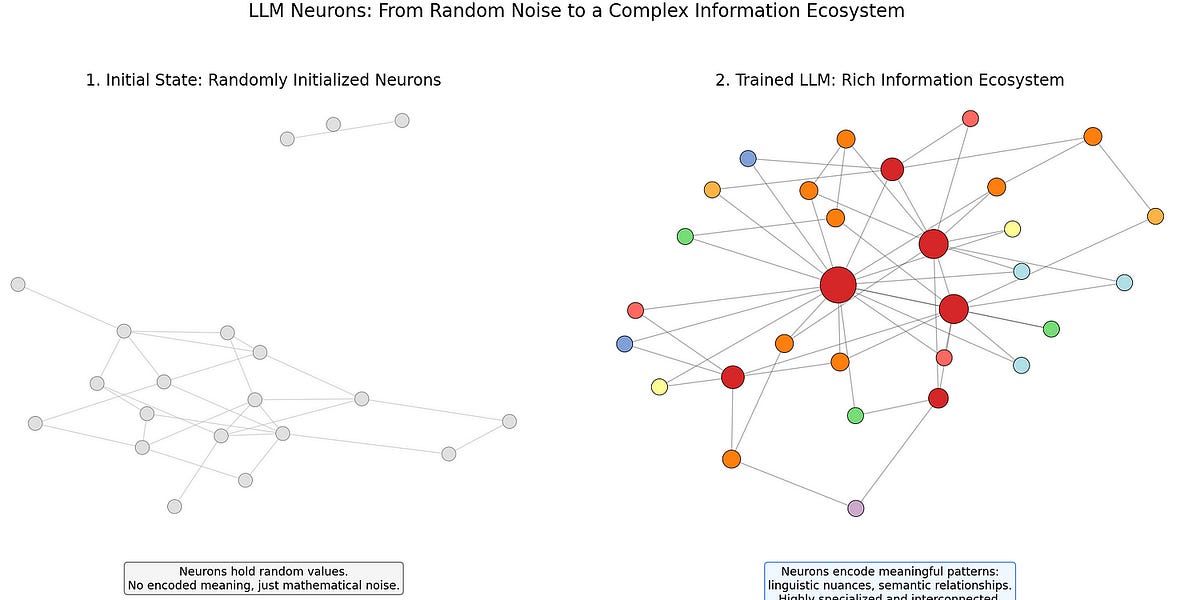

According to the analysis, fine-tuning updates neurons (in practice, the weights attached to them) that may already encode useful knowledge, so new training can unintentionally overwrite what the model previously learned. This raises concerns about the reliability of the information these models provide.

- Knowledge Overwrite: Fine-tuning could erase previously learned knowledge, leading to inaccurate outputs.

- Unintended Consequences: The changes made during fine-tuning might produce unforeseen results, diminishing the model’s overall reliability.
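The overwrite risk can be illustrated with a toy model. The following is a minimal sketch, not taken from the original article: it assumes a two-weight linear model, and the names `old_facts`, `new_facts`, and `sgd` are hypothetical. Because both "facts" rely on the same first weight, training on the new fact alone shifts that weight and degrades the old prediction.

```python
def predict(w, x):
    """Linear model: dot product of weights and inputs."""
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, data):
    """Mean squared error of the model on a list of (input, target) pairs."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def sgd(w, data, lr=0.1, epochs=200):
    """Plain per-example gradient descent on squared error; returns new weights."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y
            w = [wi - lr * 2 * err * xi for wi, xi in zip(w, x)]
    return w


# Hypothetical toy data: both examples depend on the first weight.
old_facts = [((1.0, 1.0), 3.0)]  # stands in for what the model already "knows"
new_facts = [((1.0, 0.0), 5.0)]  # stands in for the knowledge being injected

pretrained = sgd([0.0, 0.0], old_facts)  # learn the old fact first
finetuned = sgd(pretrained, new_facts)   # then fine-tune on the new fact only

loss_before = mse(pretrained, old_facts)
loss_after = mse(finetuned, old_facts)
print(f"old-fact loss before fine-tuning: {loss_before:.6f}")
print(f"old-fact loss after fine-tuning:  {loss_after:.6f}")
```

In this toy setup the model fits the new fact but its error on the old fact rises sharply. Real LLMs are far larger and the effect is less clear-cut, so this only illustrates the mechanism rather than measuring it.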

These findings suggest that current fine-tuning methods may compromise model performance rather than improve it. For developers and organizations that rely on these models, this points to a need to reevaluate how they train and deploy them.

Conclusion

As the AI landscape continues to advance, understanding the limitations and potential pitfalls of model fine-tuning will be crucial for professionals in the field. Stakeholders are encouraged to consider these insights seriously to avoid the repercussions of misguided adjustments to their AI systems.

Rocket Commentary

The scrutiny around fine-tuning large language models (LLMs) reflects a growing awareness of the complexities inherent in AI development. While the intention behind knowledge injection is to enhance model performance, the risks highlighted by TLDR AI are critical for developers and businesses to consider. The prospect of overwriting existing knowledge poses a real challenge, potentially leading to inaccuracies that can erode trust in these powerful tools. However, this is not a call to abandon fine-tuning altogether; rather, it emphasizes the need for a more nuanced approach. By adopting strategies that preserve core competencies while selectively enhancing capabilities, developers can mitigate risks and harness the transformative potential of AI responsibly. This could lead to the creation of more robust models that serve users effectively, driving innovation while ensuring ethical standards are upheld. In this dynamic landscape, vigilance and thoughtful experimentation will be key to unlocking AI's full potential for businesses and society at large.
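The commentary does not name a specific strategy for preserving existing knowledge. One commonly used option is rehearsal, where examples of old behaviour are mixed into the fine-tuning data. The sketch below is an illustrative assumption on a toy two-weight linear model, with hypothetical names (`old_facts`, `new_facts`, `sgd`), and is not a method described in the source.

```python
def predict(w, x):
    """Linear model: dot product of weights and inputs."""
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, data):
    """Mean squared error of the model on a list of (input, target) pairs."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def sgd(w, data, lr=0.1, epochs=500):
    """Plain per-example gradient descent on squared error; returns new weights."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y
            w = [wi - lr * 2 * err * xi for wi, xi in zip(w, x)]
    return w


# Hypothetical toy data: both examples depend on the first weight.
old_facts = [((1.0, 1.0), 3.0)]  # existing knowledge
new_facts = [((1.0, 0.0), 5.0)]  # knowledge to inject

pretrained = sgd([0.0, 0.0], old_facts)

# Naive fine-tuning: train on the new fact only.
naive = sgd(pretrained, new_facts)

# Rehearsal: fine-tune on the new fact together with replayed old examples.
rehearsed = sgd(pretrained, new_facts + old_facts)

print(f"naive     old-fact loss: {mse(naive, old_facts):.6f}")
print(f"rehearsed old-fact loss: {mse(rehearsed, old_facts):.6f}")
print(f"rehearsed new-fact loss: {mse(rehearsed, new_facts):.6f}")
```

Here the rehearsed model can satisfy both facts because the toy problem has a solution that fits them jointly. In a real model, old and new data may conflict, and rehearsal reduces forgetting rather than eliminating it.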

This summary was created from the original article.